Strengthening Austria's Innovation Capabilities and Research Excellence in Artificial Intelligence

Background

Artificial Intelligence (AI) is a key technology of our time and is expected to bring about profound changes on many levels. Its innovation potential and range of applications are vast, spanning medical diagnosis, autonomous vehicles, predictive maintenance, and climate protection through carbon tracking. However, the technology is evolving so fast that it has become very difficult to predict its consequences and the disruptions it may cause.

To address this, the European Union is seeking ways to regulate AI (e.g., in the upcoming AI Act, EU2023) and to minimize the risks that come with it. Because conventional methods of system verification have reached their limits with AI systems, new and robust tools for assessing technology risks are urgently needed. A focal point of the regulation drafted by the European Commission is that "high-risk" AI systems must undergo a conformity check before they are placed on the EU market.

Challenges

Current AI systems and the way they are engineered make it difficult to enforce existing safety and fundamental rights laws. This is due to a variety of reasons, including the following:

- AI models have grown increasingly complex, compromising transparency and making it challenging to identify potential risks in their design.

- Model-intrinsic issues, i.e., problems arising from the structure and opacity of the model itself, are another major concern.

- AI models rely on inductive reasoning: they generalize from specific examples. This can lead to stereotyping and biased models that perpetuate prejudices.

- Technical debt, which accrues when quick solutions are preferred over effective ones, is frequently accepted as a risk.

- Cognitive biases in human reasoning, as well as shortcomings in information exchange, can negatively affect AI-assisted decision-making.

Each of these factors presents a challenge on its own, but their combined impact further complicates the situation and makes it difficult to gain a comprehensive understanding of the entire AI development cycle.

Goals

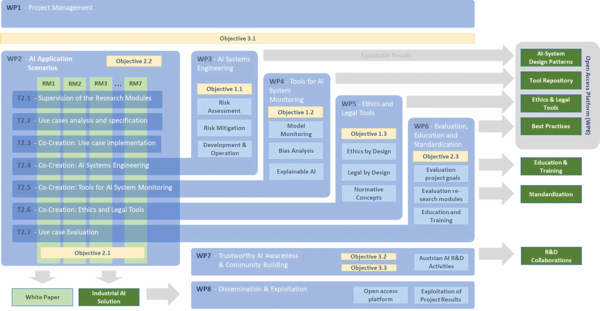

The overarching goals of this project include developing competencies and tools to better prepare the Austrian AI community and innovation ecosystem for upcoming regulations such as the EU AI Act. At the same time, the project aims to develop innovative solutions that promote sustainability and contribute to climate neutrality. We also place strong emphasis on trustworthy AI, addressing fairness, robustness, privacy protection, safety and security, accountability, and transparency in the development of AI solutions.

- Building Resilience to New Regulations: FAIR-AI's contributions aim to increase the Austrian AI community's resilience and strengthen its ability to anticipate future developments and regulations. The EU AI Act requires a risk management system to be established, implemented, documented, and maintained. We intend to meet these requirements through a multidisciplinary approach that yields viable, broadly applicable solutions.

- Practicing and Optimizing New Research and Development Processes: Implementing the new regulations successfully requires not only a theoretical understanding of them but also practical knowledge and hands-on experience. To this end, we are developing multiple research modules that offer guidance while paving the way for innovative solutions that advance sustainability and address climate change.

- Boosting Cooperation and Networks to Maintain Austria's Competitiveness as a Technology and Business Location: Many R&D companies and institutions are currently forming their own research teams to work on AI technologies. However, their focus may be constrained by the specific requirements of their environment and by a shortage of resources. To broaden their scope and deepen their understanding, FAIR-AI seeks to establish knowledge-exchange networks.

Methods

The project takes a co-development approach. We define research modules in which industry partners contribute use cases and collaborate with research institutions to implement new solutions for them. In parallel, we develop approaches, methods, and tools for trustworthy AI. Outcomes are assessed against the project objectives, prepared for use in education and training, and made available via an open-access platform.

Funding

Want to know more? Feel free to ask!

Head of Media Computing Research Group

Institute of Creative\Media/Technologies

Department of Media and Digital Technologies

Partners

- AIT Austrian Institute of Technology GmbH

- JOANNEUM RESEARCH Forschungsgesellschaft mbH

- Fachhochschule Technikum Wien

- AW

- Technische Universität Wien

- Wirtschaftsuniversität Wien

- SCCH

- Austrian Standards International (ASI)

- eutema

- Research Institute

- Semantic Web Company

- Siemens

- Brantner

- FABASOFT

- SCHEIBER

- MeinDienstplan

- Dibit Messtechnik GmbH

- Onlim

- Women in AI