Equipping everyday devices with voice interaction, fall detection, and alerting to provide support in emergencies.

Background

As life expectancy rises and more seniors aim to live independently, timely and reliable emergency detection is becoming increasingly important for ensuring both safety and autonomy. Emergencies such as falls are a particular concern: if help is delayed, they often lead to hospitalization, long-term injury, or even the loss of independent living. Although many solutions for emergency notification already exist, such as call buttons, wearable sensors, and video surveillance, each comes with significant drawbacks. Call buttons are frequently not worn or are forgotten in an emergency, single-sensor approaches lack robustness, and constant video surveillance raises privacy concerns. These issues contribute to low acceptance among the very people who would benefit most.

Project Content

Smart Companion was created to address the challenges of fall detection in a new way. It adds lying-person detection and verbal communication capabilities to an off-the-shelf robot vacuum cleaner, combining an everyday utility with emergency assistive functions. During cleaning, the robot autonomously navigates the home while analyzing images recorded by its camera. If a lying person is detected, the integrated voice assistant attempts to establish verbal communication in order to assess whether help is required. If there is no response, or if the need for assistance is confirmed, emergency services are notified.

Building on the findings of Smart Companion 1, the current system was developed through a “Human-Centered Design” (HCD) process in which the users’ needs and experiences are of pivotal importance. Verbal interaction preserves the user’s agency and acts as an error-correction mechanism: the user can dismiss the alert if no help is needed, but can also proactively request help if they cannot reach a phone or emergency button.

Supplementary search runs increase the monitoring frequency, and all image analysis is performed locally. Verbal commands allow the user to stop emergency communication and send the robot to its docking station at any time. Schedules and observation areas are user-defined, providing a safety net without requiring special equipment or continuous video monitoring.
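To make user-defined schedules and observation areas more concrete, here is a minimal Python sketch of how such a configuration could be checked. Everything in it is hypothetical: the names `SCHEDULE`, `OBSERVATION_AREAS`, `due_run` and `may_observe`, the time windows, and the room names are illustrative and do not come from the project.

```python
from datetime import datetime, time
from typing import Optional

# Hypothetical user configuration: the text only says that schedules and
# observation areas are user-defined; times and room names are made up.
SCHEDULE = {
    "cleaning": [(time(10, 0), time(11, 0))],
    "search": [  # supplementary search runs raise the monitoring frequency
        (time(7, 30), time(7, 45)),
        (time(13, 0), time(13, 15)),
        (time(19, 0), time(19, 15)),
    ],
}
# Rooms the user has released for monitoring (here the bedroom is excluded).
OBSERVATION_AREAS = {"living room", "kitchen", "hallway"}


def due_run(now: datetime) -> Optional[str]:
    """Return the run type whose time window contains `now`, or None."""
    t = now.time()
    for run_type, windows in SCHEDULE.items():
        for start, end in windows:
            if start <= t < end:
                return run_type
    return None


def may_observe(room: str) -> bool:
    """True if the room belongs to the user-defined observation area."""
    return room in OBSERVATION_AREAS
```

Keeping the configuration as plain data makes it easy for users (or caregivers) to adjust schedules and excluded rooms without touching the control logic.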

System and Development

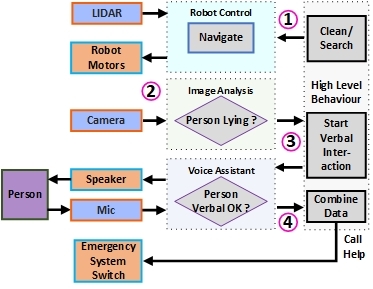

The system can be divided into four major components: robot control and hardware, image analysis, voice assistance, and an overarching behavior control program. The system overview figure illustrates the workflow: during a cleaning or search run (1), the robot periodically monitors the user’s living area and records images (2). If image analysis detects a lying person, the robot activates its voice assistant to talk to the person and ascertain whether assistance is actually required (3). This preserves the user’s control and prevents false alarms. If the user asks for help or does not respond, an emergency service is notified and receives a picture of the lying person to assess the situation further (4). Verbal communication also enables users to actively call for help without having to wait until the robot finds them. In addition, the voice interface lets users operate the robot’s regular functions.
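The escalation in steps (3) and (4) can be sketched as a small decision function. This is a hypothetical illustration, not the project’s notification interface: the `Response` values, the `build_alert` function, and the JSON field names are assumptions; the text only states that the emergency service is alerted on a help request or a missing answer and receives a picture of the lying person.

```python
import base64
import json
from datetime import datetime, timezone
from enum import Enum
from typing import Optional


class Response(Enum):
    HELP_REQUESTED = "help_requested"
    NO_RESPONSE = "no_response"
    DISMISSED = "dismissed"


def build_alert(response: Response, image_jpeg: bytes, location: str) -> Optional[str]:
    """Return a JSON alert for the emergency service, or None if no alert is needed.

    A help request or a missing answer triggers an alert; a dismissal by the
    user ends the check without one (hypothetical payload format).
    """
    if response is Response.DISMISSED:
        return None
    payload = {
        "reason": response.value,
        "location": location,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        # The picture lets the service assess the situation before responding.
        "image_jpeg_base64": base64.b64encode(image_jpeg).decode("ascii"),
    }
    return json.dumps(payload)
```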

Robot Control and Hardware

The Smart Companion system builds on a commercial robot vacuum, which provides autonomous mobility at low cost. Equipped with Lidar and a front-facing camera, the robot maps and navigates indoor spaces using its built-in SLAM functionality. Through the open-source Valetudo interface, the system can send navigation commands, define cleaning or search runs, and restrict areas for privacy.
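Valetudo exposes the robot’s functions through a local REST API. The sketch below only builds HTTP requests and does not send them. It assumes Valetudo’s capability-based v2 endpoints (`BasicControlCapability` with `{"action": "home"}`, `MapSegmentationCapability` with a `start_segment_action`); verify paths and payloads against the API documentation served by your own Valetudo instance. The host name `valetudo.local` and the helper names are placeholders, and using a segment run as a search run is only one possible mapping.

```python
import json
import urllib.request

# Assumption: Valetudo v2 REST API, capability endpoints addressed with HTTP PUT.
# "valetudo.local" is a placeholder for the robot's address on the home network.
VALETUDO_BASE = "http://valetudo.local/api/v2/robot/capabilities"


def _put(capability: str, body: dict) -> urllib.request.Request:
    """Build (but do not send) a JSON PUT request for a Valetudo capability."""
    return urllib.request.Request(
        f"{VALETUDO_BASE}/{capability}",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


def return_to_dock() -> urllib.request.Request:
    """E.g. after the user verbally sends the robot back to its dock."""
    return _put("BasicControlCapability", {"action": "home"})


def search_rooms(segment_ids: list[str]) -> urllib.request.Request:
    """Hypothetical search run restricted to the rooms (map segments) the user released."""
    return _put("MapSegmentationCapability", {
        "action": "start_segment_action",
        "segment_ids": segment_ids,
        "iterations": 1,
        "customOrder": True,
    })
```

On a real robot, a request would be sent with `urllib.request.urlopen(return_to_dock(), timeout=5)`.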

Image Processing

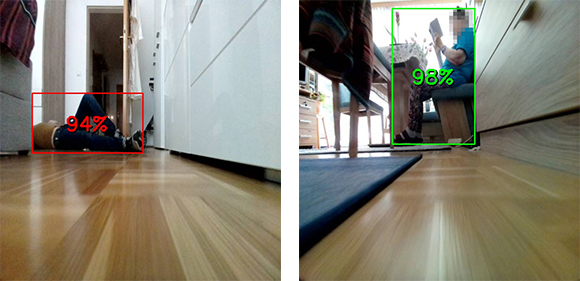

The robot’s onboard RGB camera captures an image every two seconds during cleaning and search runs. To reduce redundant data, near-duplicate frames are filtered out before analysis. Lying-person detection is based on the YOLOv8 convolutional neural network (CNN). The model’s classification head was replaced to introduce three new classes (“person”, “lying person”, “no person”), and the lower layers were partially frozen to preserve generalizable features. The model was fine-tuned on a combination of public and custom datasets (21k images) labeled with these classes and evaluated on separate test sets (5k images). To meet the project’s requirements, the custom dataset includes images from the robot’s onboard camera, because this specific viewpoint is underrepresented in public datasets, particularly for lying individuals. Multi-view analysis increases reliability: detections are validated if multiple images or high-confidence scores confirm the same event. The example images show a detected lying person (red bounding box, score 0.92) and a detected person (green bounding box, score 0.98).
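The frame filtering and multi-view validation described above can be illustrated with a small, dependency-free sketch. The mean-absolute-difference test for near-duplicates, all thresholds (`DUPLICATE_MAD`, `MIN_SCORE`, `HIGH_SCORE`, `MIN_VIEWS`, `WINDOW_S`), and the function names are assumptions; the text does not specify how duplicates are detected or which values are used. YOLOv8 inference itself is left out, and `Detection` stands in for its per-frame output.

```python
from dataclasses import dataclass

# Hypothetical thresholds; the values used in the project are not stated.
DUPLICATE_MAD = 4.0   # mean absolute grey-level difference below which a frame is a near-duplicate
MIN_SCORE = 0.5       # lying-person detections below this score are ignored
HIGH_SCORE = 0.9      # a single detection at or above this score is enough
MIN_VIEWS = 2         # otherwise this many frames must agree ...
WINDOW_S = 10.0       # ... within this many seconds


def is_near_duplicate(prev: list[int], cur: list[int]) -> bool:
    """Compare two equally sized, non-empty greyscale frames (flattened pixel lists)."""
    mad = sum(abs(a - b) for a, b in zip(prev, cur)) / len(cur)
    return mad < DUPLICATE_MAD


def filter_frames(frames: list[list[int]]) -> list[int]:
    """Return indices of frames worth analyzing; the first frame is always kept."""
    kept: list[int] = []
    for i, frame in enumerate(frames):
        # Compare with the last kept frame so that slow drift still triggers analysis.
        if not kept or not is_near_duplicate(frames[kept[-1]], frame):
            kept.append(i)
    return kept


@dataclass
class Detection:
    t: float      # capture time in seconds
    label: str    # "person", "lying person" or "no person"
    score: float


def confirmed_lying(detections: list[Detection]) -> bool:
    """One high-confidence view, or several agreeing views within a short window."""
    lying = sorted(
        (d for d in detections if d.label == "lying person" and d.score >= MIN_SCORE),
        key=lambda d: d.t,
    )
    if any(d.score >= HIGH_SCORE for d in lying):
        return True
    for i, first in enumerate(lying):
        views = [d for d in lying[i:] if d.t - first.t <= WINDOW_S]
        if len(views) >= MIN_VIEWS:
            return True
    return False
```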

Voice Assistance

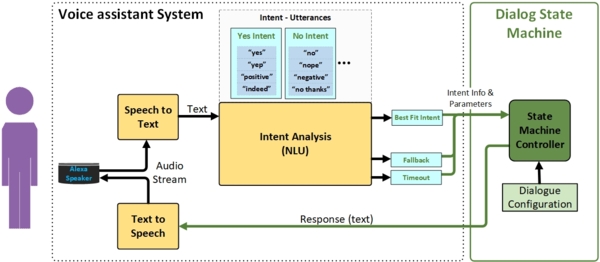

Verbal interaction is handled by an Amazon Echo Dot smart speaker integrated with the Alexa Voice Service (AVS) cloud. Using the Alexa Skills Kit, spoken utterances are transcribed to text and compared against predefined utterances. These utterances are grouped into intents that capture their meaning (e.g., “help”, “I need assistance”, and “call someone” all belong to the “help” intent). The intents are processed by a custom Dialog State Machine, which structures the flow of the conversation and adapts the system’s responses. The dialog model distinguishes between expected, unexpected, and missing responses, enabling clarification prompts and repeated queries. This keeps communication robust even under stress or with ambiguous input. Voice interaction maintains user agency by allowing users to dismiss false alarms, confirm emergencies, or proactively request assistance. The dialog model comprises 398 utterances grouped into 21 intents and more than 40 dialog states.
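The interplay of intents and dialog states can be approximated in a few lines of Python. This is a toy stand-in, not the Alexa Skills Kit: ASK performs speech recognition and intent resolution in the cloud, whereas here exact string matching is used. Apart from the three “help” utterances quoted above, all intents, utterances, prompts, and the re-prompt limit `MAX_REPEATS` are hypothetical.

```python
from typing import Optional

# Toy intent model: exact matching of normalized utterances (assumption).
INTENTS = {
    "help": {"help", "i need assistance", "call someone"},
    "ok": {"i am fine", "i'm fine", "no help needed"},
    "dock": {"go home", "go to your dock"},
}
MAX_REPEATS = 2  # hypothetical number of re-prompts before escalating on silence


def classify(utterance: Optional[str]) -> Optional[str]:
    """Map an utterance to an intent; None means no response, "unexpected" no match."""
    if utterance is None:
        return None
    text = utterance.strip().lower()
    for intent, samples in INTENTS.items():
        if text in samples:
            return intent
    return "unexpected"


def check_in(utterances: list[Optional[str]]) -> tuple[str, list[str]]:
    """Run the 'do you need help?' dialog; return the outcome and the prompts spoken."""
    prompts = ["I noticed someone lying on the floor. Do you need help?"]
    silences = 0
    for utterance in utterances:
        intent = classify(utterance)
        if intent == "help":                      # expected: emergency confirmed
            return "notify_emergency", prompts
        if intent == "ok":                        # expected: false alarm dismissed
            return "dismissed", prompts
        if intent == "dock":                      # user sends the robot away
            return "return_to_dock", prompts
        if intent is None:                        # no response: repeat the query
            silences += 1
            if silences > MAX_REPEATS:
                return "notify_emergency", prompts
            prompts.append("I did not hear you. Do you need help?")
        else:                                     # unexpected: ask for clarification
            prompts.append("Sorry, please say 'help' or 'I am fine'.")
    return "notify_emergency", prompts            # dialog ended without an answer
```

Escalating on silence rather than on a fixed timeout mirrors the principle in the text: a missing answer is treated as a possible emergency, while any clear answer keeps the user in control.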

Behavior State Machine

The Behavior State Machine coordinates the entire system by integrating information from navigation, image processing, and voice interaction. It defines the robot’s operational states (e.g., cleaning, searching, potential emergency, emergency mode) and governs the transitions between them based on sensor input and user responses. For robust system control, it supervises component health, retries failed actions, and adapts its behavior dynamically when deviations are detected.
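A transition table is one compact way to express such a behavior state machine. The sketch assumes hypothetical event names (`lying_confirmed`, `no_response`, `user_dock`, and similar) and adds a docked state; only cleaning, searching, potential emergency, and emergency mode are named in the text. `with_retries` illustrates the retry of failed actions in its simplest form.

```python
from enum import Enum, auto
from typing import Callable


class State(Enum):
    DOCKED = auto()
    CLEANING = auto()
    SEARCHING = auto()
    POTENTIAL_EMERGENCY = auto()
    EMERGENCY = auto()


# Hypothetical events; the states follow the examples named in the text.
TRANSITIONS = {
    (State.DOCKED, "start_cleaning"): State.CLEANING,
    (State.DOCKED, "start_search"): State.SEARCHING,
    (State.CLEANING, "run_finished"): State.DOCKED,
    (State.SEARCHING, "run_finished"): State.DOCKED,
    (State.CLEANING, "lying_confirmed"): State.POTENTIAL_EMERGENCY,
    (State.SEARCHING, "lying_confirmed"): State.POTENTIAL_EMERGENCY,
    (State.POTENTIAL_EMERGENCY, "user_ok"): State.DOCKED,  # simplification: no resume
    (State.POTENTIAL_EMERGENCY, "no_response"): State.EMERGENCY,
}
for s in State:
    # A spoken request for help is honored in every state ...
    TRANSITIONS[(s, "help_requested")] = State.EMERGENCY
    # ... and the user can send the robot back to its dock at any point.
    TRANSITIONS[(s, "user_dock")] = State.DOCKED


def step(state: State, event: str) -> State:
    """Return the next state; events without a defined transition are ignored."""
    return TRANSITIONS.get((state, event), state)


def with_retries(action: Callable[[], bool], attempts: int = 3) -> bool:
    """Retry a component action (e.g. a navigation command) that fails or raises OSError."""
    for _ in range(attempts):
        try:
            if action():
                return True
        except OSError:
            pass  # a real supervisor would log this and update component health
    return False
```

Because unknown events leave the state unchanged, a spurious input cannot push the robot into an undefined state.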

Results

After the user-centered design phase was completed, the prototype underwent a field trial with six older adults, aged 60 to 80, who lived alone and independently. Each trial lasted one month. At the start, the system was introduced, and personalized schedules and monitoring areas for search runs were configured. Participants documented their experiences in daily logs, and interviews were conducted before and after the trial period. In addition, lying simulations were carried out to familiarize participants with the robot’s emergency procedures and to collect targeted feedback. Across all trials, more than 100k images were recorded. Together with user reports and automated system logs, these data were used to iteratively improve the dialog model, the robot’s behavior, and CNN performance.

Over a total of six months of deployment, the system demonstrated stable operation. Only a single false alarm occurred, caused by a misclassification while the user was not present. In both the simulations and additional laboratory evaluations, multi-view lying detection proved robust and effective. The survey at the end of the trial showed positive evaluations, with average ratings of 4.8/5 for trust in lying detection, 4.9/5 for trust in privacy protection, and 5/5 for perceived usefulness. Although the sample size is small, the study covered 184 days of continuous in-home testing with 208 search and cleaning runs, providing solid evidence that the system can operate reliably under real-world conditions. Taken together with the iterative, user-centered development, these findings indicate a high degree of both technical reliability and user acceptance.

Acknowledgements

The project partners benefit from its outcomes in many ways. St. Pölten UAS leads the European University Initiative E³UDRES² with several partner universities and sees Smart Companion as an important milestone for further international research on this topic. Robert Bosch AG strengthens its position as a European manufacturer of robot vacuum cleaners and smart home systems. The Akademie für Altersforschung am Haus der Barmherzigkeit gains first-hand experience with assistance systems and provides valuable support in achieving high user acceptance and high ethical standards. As a provider of Personal Emergency Response Systems, Arbeiter-Samariter-Bund Gruppe Linz urgently needs devices for passive fall detection, because such systems are currently not available on the market.

Funded by the “ICT of the future” programme initiated by the Federal Ministry for Climate Action, Environment, Energy, Mobility, Innovation and Technology (BMK).


Department of Media and Digital Technologies

- Robert Bosch AG (BOSCH), Wien

- Akademie für Altersforschung am Haus der Barmherzigkeit (AAF), Wien

- Arbeiter-Samariter-Bund Gruppe Linz (ASB), Linz